Automating & evaluating load testing with Locust and Keptn

When it comes to evaluating the quality of your software, there is usually no way around automated load tests. Locust has proven to be a mature tool to swarm your system with millions of simultaneous users to test your applications.

However, while the definition and execution of tests can be automated with Locust, evaluating continuous performance tests is still a big challenge in many organizations. Instead of tediously scripting evaluations for performance tests, we are going to learn how Keptn can trigger and evaluate Locust tests based on configuration, not scripts. We’ll have a look at service-level objectives (SLOs) and how a couple of lines of process definition can help us automate the evaluation of load tests.

Before we go into any details, let’s start with the basics!

What is Locust?

Locust is an easy-to-use, scriptable, and scalable performance testing tool. It allows you to define your users’ behavior in regular Python code instead of using a UI or domain-specific language. Thus, it follows the “everything-as-code” approach: all tests can be stored and versioned alongside your application code in your code repository. With Locust, you can test any system or protocol, such as HTTP, WebSockets, or Postgres. It can also be modified and extended.

However, in this blog post, we will focus on Locust’s basic capabilities. If you want to learn more, have a look at an introduction to Locust by Lars Holmberg.

Load testing with Locust

Let’s have a quick look at how a test definition works in Locust. In the following example, we create a WebsiteUser that simulates traffic to the root path of our web application, i.e., the “/” endpoint. In a second task, a sample shopping cart endpoint, “/carts/1”, is queried. After each task, the user waits between 5 and 15 seconds.

Of course, this is a very simple example, but it gives us an idea of how Locust tests define which endpoints of your service the simulated users access. There are multiple options for concurrency, failure handling, scaling, and more. Once tests are defined, they can be run via the locust command (or optionally the Locust web interface) to spawn and simulate multiple users.

Load tests can be run ad hoc, which is great for developers who want to execute the scripts on their local machine and analyze the results themselves. However, analyzing test results and comparing them across multiple versions might not be feasible with this approach. Therefore, in larger organizations, load tests need to be part of a continuous delivery (CD) process.

There are several reasons for this. First, load tests have to be part of a process so that they cannot be skipped or forgotten. Second, identical test runs have to be executed across multiple builds of a software system to allow comparison. Third, reporting has to be built in to provide the basis for deciding whether a build is allowed to go into production.

Now, let’s have a look at how we can integrate Locust into a CD process, using Keptn as an example. Keptn also comes with a way to continuously evaluate the performance of our applications.

Continuous Performance Verification with Locust + Keptn

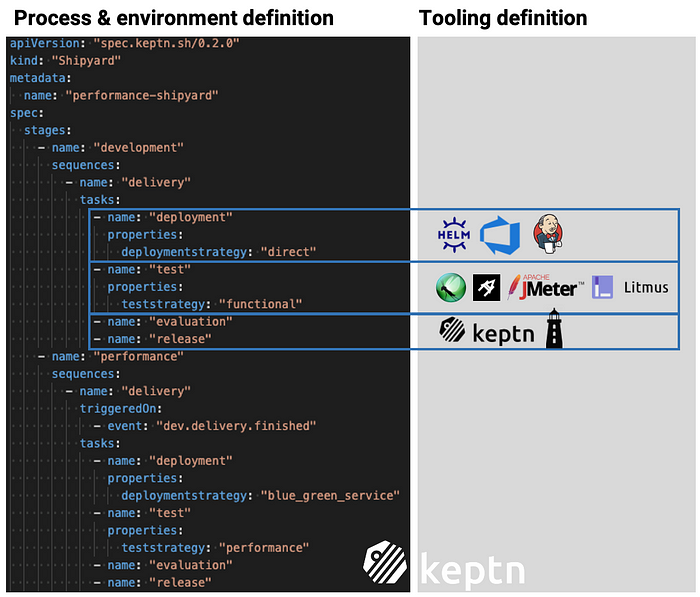

To truly provide continuous performance verification and even performance verification “as-a-self-service”, performance testing plus the evaluation thereof must be integrated into the CD process. In Keptn, this process is defined via the so-called shipyard file, and therefore dedicated testing and evaluation tasks can be added to any sequence, such as a “delivery sequence”. For the testing, we are going to use Locust, while for the evaluation task, we are going to use Keptn’s built-in capabilities to evaluate the quality of an application or microservice, based on Service-Level Objectives (SLOs). Let’s have a look at the example below.

As you can see, we’ve defined a multi-stage environment with a development and a performance environment. In the development stage, we’ve defined a “test” task that triggers “functional” tests. Once these tests are finished, an automatic evaluation is performed, and only if it is successful is the new build released into the next stage, which in this example is our performance environment.

In the performance environment, “performance” tests are triggered and, once they are finished, another evaluation in the form of a Keptn quality gate is executed.
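To make the gating logic of this process concrete, here is a small Python model of it. This is only an illustration: the actual process definition is Keptn’s shipyard file, not Python, and the names PROCESS, run_delivery, run_tests, and evaluate are hypothetical.

```python
from typing import Callable, List, Tuple

# Hypothetical model of the two stages: (stage name, test strategy).
PROCESS: List[Tuple[str, str]] = [
    ("dev", "functional"),
    ("performance", "performance"),
]


def run_delivery(
    run_tests: Callable[[str, str], None],
    evaluate: Callable[[str], bool],
) -> List[str]:
    """Walk the stages in order and stop as soon as an evaluation fails."""
    log: List[str] = []
    for stage, strategy in PROCESS:
        run_tests(stage, strategy)  # e.g. trigger the Locust tests
        log.append(f"{stage}: {strategy} tests finished")
        if not evaluate(stage):  # quality gate for this stage
            log.append(f"{stage}: evaluation failed, build is not promoted")
            break
        log.append(f"{stage}: evaluation passed")
    return log
```

Calling run_delivery with an evaluate function that only passes for the dev stage would run both stages and end with the failed quality gate in the performance stage.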

As you might have noticed, the shipyard file defines the process, while the tooling itself is not defined in the same file. Instead, the tooling is defined in Keptn’s uniform: with this, we can dynamically manage the tool integrations for our CD process and change or switch tools without adjusting the process definition.

Another crucial part of a CD process is the evaluation of the application’s quality to determine if it is ready to be shipped into production. For this, we are going to leverage Keptn’s built-in quality gating capabilities. In the following screenshot, we can see how each new build has been evaluated by Keptn’s quality gates and a total score based on SLOs has been generated for each build. With this, we decide if a new build should go into production or not and even render a trend regarding the evaluation score over time.

Leveraging Service Level Objectives (SLOs) for performance evaluations

Running the load tests as part of the CD process is the first step of continuous performance verification. The second step is to automatically verify and evaluate the results of the load tests. For this, Keptn comes with a comprehensive way to define the quality of applications (whether they consist of a monolith or hundreds of microservices) in terms of service-level objectives (SLOs). This allows you to declaratively define criteria for any service-level indicators (SLIs), such as response time, error or failure rate, throughput, CPU or network utilization, or any other metric that is important in your organization.

In the following example, we have defined an SLO file that validates that the response time for the 50th and 95th percentiles stays below 200 ms and increases by no more than 10% compared to previous runs.
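The SLO file itself is a declarative Keptn configuration. To make the two criteria concrete, here is a minimal Python sketch of the check they describe. It is not Keptn’s implementation: the function names, the SLI names, and the choice to use the average of previous runs as the baseline are assumptions made for illustration.

```python
from statistics import mean
from typing import Dict, List

ABSOLUTE_LIMIT_MS = 200.0     # response time must stay below 200 ms
MAX_RELATIVE_INCREASE = 0.10  # and rise at most 10% over the baseline


def passes_objective(current_ms: float, previous_ms: List[float]) -> bool:
    """Check one SLI value against the absolute and the relative criterion."""
    if current_ms >= ABSOLUTE_LIMIT_MS:
        return False
    if not previous_ms:
        return True  # no earlier runs, so only the absolute limit applies
    baseline = mean(previous_ms)  # assumption: baseline is the average
    return current_ms <= baseline * (1 + MAX_RELATIVE_INCREASE)


def evaluate(
    current: Dict[str, float], history: Dict[str, List[float]]
) -> Dict[str, bool]:
    """Evaluate every SLI of the current run against its own history."""
    return {
        sli: passes_objective(value, history.get(sli, []))
        for sli, value in current.items()
    }


# Hypothetical SLI values in milliseconds for the current and earlier runs.
current_run = {"response_time_p50": 120.0, "response_time_p95": 190.0}
earlier_runs = {
    "response_time_p50": [115.0, 118.0],
    "response_time_p95": [160.0, 165.0],
}
print(evaluate(current_run, earlier_runs))
```

With these sample values, the 50th percentile passes both criteria, while the 95th percentile stays below 200 ms but has grown by more than 10% over its baseline and therefore fails.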

Please note that this is just an example and, of course, can and should be extended for production usage. Another important aspect is that this definition file contains no vendor-specific code. The actual data provider for the response time, error rate, or other metrics is again defined in the Keptn uniform, which lets us focus on the quality aspect without blurring it with vendor-specific API calls or queries. Keptn supports Prometheus, Dynatrace, and NeoLoad data out of the box, and others can be added as well.

Bonus: Performance Verification as a Self-service

In addition to integrating performance verification as part of the continuous delivery process, developers can make use of this capability in a “self-service” manner. How? Let’s reuse the principle of the shipyard definition to create a dedicated “self-service” stage, e.g., performance-testing, without release or promotion into any other stage or environment. With this, developers can directly deploy into the performance-testing stage, have Keptn trigger the Locust tests, and perform the evaluation. The result can then be pulled via the Keptn CLI or API or even pushed directly to the developer via a notification tool such as Slack or Microsoft Teams.

The image below shows this use case: first, the user triggers Keptn, which starts to deploy a new build into a test environment. Next, test execution is triggered, and once it has finished, the data in terms of SLIs is collected. With this data, Keptn evaluates the SLOs and reports the result back to the user.

Getting started!

In this blog, we’ve learned how you can use Locust and Keptn to automate load testing and to evaluate the applications under test with SLOs. All it takes is a locustfile for the definition of the tests, as well as Keptn with its declarative shipyard file (process definition) and SLOs (quality definition). Get started yourself by learning more in this joint presentation by the Locust and Keptn maintainers on how to integrate Locust and Keptn. Find the Locust integration for Keptn in our integrations repository.